AI adoption is changing how we live and work, so we need clear rules to ensure we use it wisely.

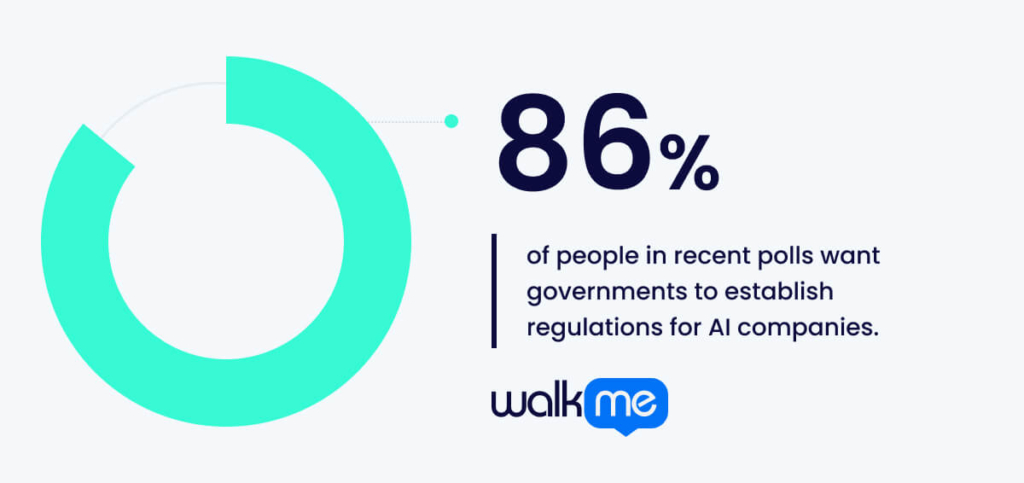

Recent polls show that 86% of people want governments to set rules for AI companies. Establishing AI ethics helps create AI that works for everyone. These rules help protect people and make AI systems fair by reducing bias.

Without strong ethical guidelines, AI’s problems might outweigh its benefits. Putting ethics into practice is essential now that AI affects us all.

This article will explore the concept of AI ethics, its importance, key stakeholders, best practices, challenges, and benefits, offering a comprehensive overview of responsible AI development.

What is AI ethics?

AI ethics is the set of principles that guide the responsible use of artificial intelligence.

AI ethics sets rules for using AI in ways that benefit everyone. These rules ensure that AI systems are fair, open, and respectful of people’s rights. They also help stop unfair treatment, protect private information, and enhance AI security.

Responsible AI ethics strikes a balance between adopting new technology and keeping people safe. This balance is crucial since AI is now part of our daily workflow.

When companies follow ethical guidelines, people trust AI more, and it causes fewer problems. This helps AI work better for diverse people and groups in our society.

The importance of AI ethics

As our world increasingly relies on automation, having suitable ethics for AI is important.

AI makes decisions that affect people’s lives, so we need guidelines to prevent misuse, risks, and invasion of privacy. These ethics rules ensure that AI is transparent, accountable, and fair.

This helps people build digital trust and ensures AI is developed correctly. Ethical rules also ensure AI benefits everyone in society, not just the few.

Without these standards, AI could increase unfair biases and risks. That’s why it’s crucial to have principles that protect everyone as AI becomes a bigger part of our lives.

Who’s responsible for AI ethics?

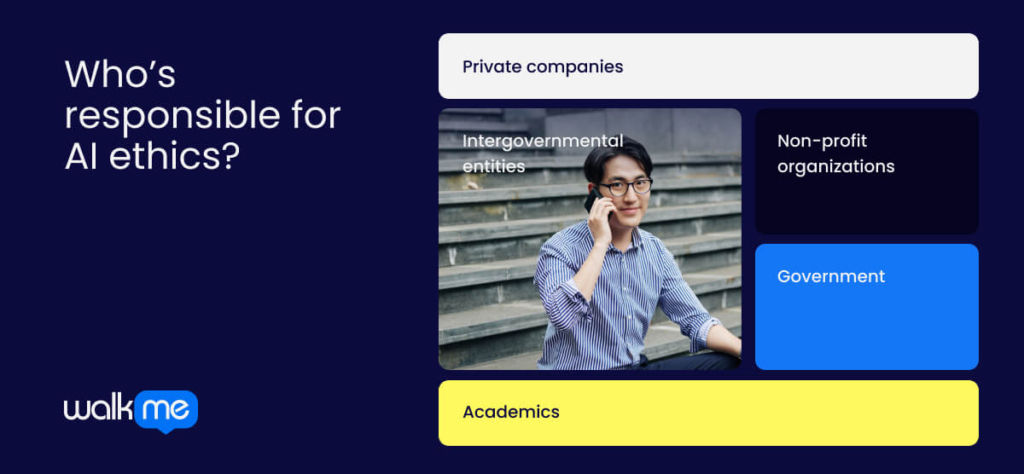

Many groups play a role in AI ethics. These include academics, governments, non-profits, intergovernmental bodies, and private companies, all of which help create and enforce rules for using AI.

Each plays their part in shaping ethical standards and enforcing accountability. Working together, these groups ensure AI benefits everyone. It takes cooperation across many fronts to ensure this technology is used correctly.

Let’s take a look at the key stakeholders for ethical AI:

Private companies

Private companies are vital because they implement AI ethics directly in their products and services.

Adopting ethical guidelines helps ensure their AI systems are fair and protect privacy. This prevents harm and builds the public trust that drives responsible innovation.

Intergovernmental entities

International groups like the UN and EU promote ethical AI across borders. They set standards that go beyond any single country’s policies and tackle global AI challenges, such as privacy and security. Their work keeps ethics consistent and helps prevent misuse across nations.

Non-profit organizations

Non-profit groups are also important for AI ethics. They raise awareness, conduct research, and influence policy to advocate for ethical AI. These watchdogs highlight risks and push for more accountability. Their focus is ensuring AI benefits everyone, not just a few.

Government

Governments are also critical players in AI ethics. They make rules and enforce them to address issues like AI privacy, fairness, and accountability. Government action ensures AI development matches societal values, helping build trust and confidence in wider AI use.

Academics

Academics play a key role in AI ethics. They study how to use AI responsibly and its effects on society. Their work provides the evidence that guides AI policy. Academics also teach future leaders about the ethical challenges of AI as technology evolves.

How to establish AI ethics

Ethical AI needs clear guidelines. This section shows the best ways to develop responsible AI. Following these standards helps organizations protect privacy and earn public trust.

Let’s take a look at how to establish AI ethics:

Assess AI’s impact

Understanding how AI products affect users and society is crucial for identifying potential AI risks. Companies can examine these effects to check that their AI benefits everyone and meets society’s expectations, which is the basis for using it ethically and responsibly.

Share values

Clarifying a company’s values and ethics for AI builds trust and sets expectations for how AI will be used. Companies must ensure people understand their ethical approach by showing honesty, fairness, and responsibility. Clearly stated values then guide everyday choices and support ethical decision-making.

Be open about AI

Being open about how AI works helps build trust. People need to understand the technology and feel comfortable with it. Using open-source tools and sharing information about AI’s processes can help show that it meets ethical standards.

Fix AI bias

It’s important to fix AI bias so that AI works fairly for everyone. Finding and correcting bias in the data, in how the AI works, or in the results it gives helps ensure everyone is treated equally. Identifying and correcting bias also reduces the risk of AI-related legal issues.
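One simple way to look for bias in results is to compare how often each group receives a favorable outcome. The Python sketch below is a minimal illustration, not a complete fairness audit. The function names, the sample records, and the 0.8 review threshold are all assumptions made for this example (the threshold is similar in spirit to the "four-fifths" rule of thumb used in some employment contexts), and a real review would look at more than one metric.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the AI system produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so there is no gap
    return min(rates.values()) / highest


# Hypothetical, made-up outcomes used only to exercise the functions.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cutoff for this sketch, not a legal standard
needs_review = ratio < REVIEW_THRESHOLD

print(rates)
print(f"ratio={ratio:.2f}, needs_review={needs_review}")
```

A low ratio does not prove discrimination on its own. It is a signal that the data and the model deserve a closer look.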

Check AI risks

Checking for risks regularly helps companies monitor ethical issues and make changes. Finding new risks, like unintended bias or security problems, lets people fix them before they cause disruption. Regular risk assessments help ensure that AI keeps developing responsibly.

Examples of AI ethics

Understanding real-world examples of AI ethics is crucial. These examples show how transparency, fairness, and accountability affect AI’s impact on society.

Let’s take a closer look at some examples of AI ethics.

Putting people first

When AI systems are used in healthcare, like AI diagnostic tools, the most important thing is ensuring they help patients and respect their rights. These AI tools assist doctors by providing information to help them treat patients better. Ethical AI design focuses on respecting each patient’s unique needs and situation.

For example, AI tools should avoid methods that make patients uncomfortable, and providers should always get the patient’s permission before using these tools. This helps build trust between patients and the technology.

Making AI clear and explainable

AI systems that greatly affect people’s lives need to be transparent and easy to understand. Take credit-scoring AI, such as the systems used by companies like Experian. These systems make decisions that affect people’s finances. To be ethical, the companies behind them must clearly explain how their systems work.

Clear explanations help people understand AI’s decisions. This openness also allows people to question the AI or appeal decisions they disagree with, and it builds trust in the technology.
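To make the idea of an explainable decision concrete, the sketch below scores an applicant with a toy linear model and reports how much each factor raised or lowered the score. Everything in it is hypothetical: the feature names, weights, base score, and cutoff are invented for illustration and do not reflect how Experian or any real lender scores credit.

```python
# Hypothetical weights for a toy linear score; not any real bureau's model.
WEIGHTS = {
    "on_time_payment_rate": 300.0,   # share of payments made on time (0 to 1)
    "credit_utilization": -200.0,    # share of available credit in use (0 to 1)
    "years_of_history": 10.0,        # length of credit history in years
}
BASE_SCORE = 400.0       # assumed starting score
APPROVAL_CUTOFF = 650.0  # assumed approval threshold


def explain_decision(applicant):
    """Score an applicant and list what each factor contributed."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASE_SCORE + sum(contributions.values())
    # Sort ascending so the factors that lowered the score most come first.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return {
        "score": score,
        "approved": score >= APPROVAL_CUTOFF,
        "contributions": contributions,
        "top_negative_factors": [name for name, value in ranked if value < 0],
    }


# Made-up applicant used only to exercise the function.
applicant = {
    "on_time_payment_rate": 0.9,
    "credit_utilization": 0.8,
    "years_of_history": 4,
}
result = explain_decision(applicant)

print(f"score={result['score']:.0f}, approved={result['approved']}")
for name, value in result["contributions"].items():
    print(f"  {name}: {value:+.0f}")
print("factors that lowered the score:", result["top_negative_factors"])
```

Listing the factors that lowered the score gives a person something specific to question or appeal. More complex models usually need dedicated explanation techniques to produce a comparable breakdown.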

Holding companies responsible

When companies develop AI, they must be responsible for how that technology affects people. Microsoft has a special team that checks its AI products to ensure they don’t cause problems or harm people.

This accountability matters because it means the company is carefully monitoring its AI and will fix issues that come up. Responsible oversight like this helps maintain public trust because people know that someone is accountable for ensuring the AI is used ethically and safely.

Removing bias and discrimination

AI systems can sometimes make unfair decisions that discriminate against people based on factors like gender or race. This is a big problem, especially for AI used in hiring. Companies have to work hard to identify and remove these biases from their AI.

LinkedIn, for example, regularly tests and adjusts the algorithms in its job-matching AI to reduce gender and racial biases. By fixing the data and algorithms that feed into the AI, companies can create fairer, more equal opportunities for everyone.

Protecting privacy and data rights

When AI systems use people’s data, this information must be kept safe and private. Apple’s health apps are a good example of ethical data practices. These apps securely store people’s health information and give users control over their own data.

Features like encryption and local data storage on the user’s device mean people’s private details are protected from misuse. This shows Apple respects users’ rights to privacy and control over their personal information.

What are the benefits of ethical AI?

Understanding the benefits of ethical AI is vital. It helps people trust new technologies and encourages companies to innovate responsibly. Most importantly, it ensures that technology matches society’s values, building a fairer, more sustainable future for everyone.

Let’s take a closer look at the benefits of ethical AI:

Greater customer trust

When companies are clear about how their AI works, customers trust them more. For example, Starbucks explains how it uses AI to suggest drinks to customers. Being open like this makes customers feel safer using its service. Customers who trust a company are more likely to keep returning, which can improve return on investment.

Better brand reputation

Using ethical AI can improve a brand’s reputation. For example, Salesforce protects customer data and is open about how it uses AI. This approach helps customers trust the company more. When businesses align AI with their values, they attract people who care about ethics, building stronger, long-lasting loyalty.

Higher employee satisfaction

Using AI fairly can make employees happier. Companies that use AI to hire people without unfair bias create more diverse workplaces. This makes employees feel respected, which helps them work better. When companies treat people fairly, both workers and the company benefit.

Responsible business practices

Ethical AI helps companies focus on helping society, not just making money. Companies like Microsoft use AI in ways that support communities. This creates a company culture that cares about doing what’s right, which helps make society better.

Lower risk of legal issues

Ethical AI can help avoid legal problems such as unfair treatment or misuse of personal information. Companies that regularly check their AI for bias can find and fix problems early. Following regulations and doing what’s right protects companies from expensive lawsuits and damage to their reputations.

What are the challenges of ethical AI?

Recognizing the challenges of ethical AI is essential. It highlights areas where trust in technology can falter and helps companies address risks. Understanding these challenges helps ensure AI aligns with society’s values, minimizing harm.

Let’s explore the key challenges of ethical AI:

Understanding AI decisions

One challenge of ethical AI is making its decisions clear to everyone. When AI makes important choices, like who gets a loan, people often don’t understand why. Ensuring people understand how AI makes decisions helps them trust it and believe it’s fair.

Responsibility for AI actions

When AI causes problems, it’s hard to know who to blame. For example, if a self-driving car crashes, we need to figure out if it’s the fault of the people who made it, sold it, or used it. We need clear rules about who’s responsible when AI makes mistakes or causes harm.

Ensuring fairness

AI can accidentally treat some groups of people better than others. For instance, when AI helps choose who gets a job, it might favor certain people if it learns from unfair information. To keep AI unbiased, we must keep testing it and fixing problems so everyone gets the same opportunities.

Preventing misuse

A major challenge is stopping people from using AI in harmful ways. For example, AI can make deepfakes that look very real, which could hurt people. We need rules and safety measures to ensure AI is used to help people, not harm them.

Managing misinformation and copyright

AI can create content that looks real but isn’t, like fake news or copies of artists’ work without permission. This challenges us to make strict rules that protect content creators and ensure information stays truthful and reliable for everyone who uses it.

Now that you have a well-rounded overview of ethical AI, it’s important to understand which authorities are shaping and enforcing these standards.

Here is a list of authorities on developing ethical AI:

- ACET AI for Economic Policymaking: Looks at how AI can be used fairly to help make economic decisions across Africa.

- AI Now Institute: Studies how AI affects society, especially looking at how AI systems can be checked, workers’ rights, and privacy.

- AlgorithmWatch: Works to ensure AI systems support democracy and fairness by creating tools that show how they work.

- ASEAN Guide on AI: Helps Southeast Asian countries use AI in an ethical way.

- Berkman Klein Center: Studies how to make AI fair and create rules for using it properly.

- CEN-CENELEC AI Committee: Creates rules for responsible AI use in Europe, making sure AI systems are clear and reliable.

- CHAI (Center for Human-Compatible AI): Works with universities to create AI systems that follow human values.

- European Commission AI Watch: Keeps track of European AI development and provides advice on trustworthy AI.

- NIST AI Risk Management Framework: Helps organizations manage AI risks and use AI responsibly.

- Stanford Institute for Human-Centered AI: Develops AI that puts people first, especially in healthcare.

- UNESCO AI Ethics Guide: A worldwide guide for ethical AI use, created with input from many countries.

- World Economic Forum’s AI Guidelines: Provides practical steps for developing and managing AI responsibly.

Key takeaways on AI ethics

Understanding AI ethics is crucial as AI becomes a bigger part of our lives at work and home.

We need clear rules to ensure AI helps everyone and doesn’t cause problems like unfairness or privacy issues. Academics, governments, non-profits, and private companies all play important roles in creating these rules and ensuring compliance.

Fair practices, like being open about how AI works and checking for problems regularly, help people trust AI and encourage its responsible use. We must also tackle challenges like making AI decisions clearer and preventing misuse.

When we prioritize AI ethics, we create technology that aligns with what society believes is right, includes everyone, and improves our communities.

Working together and keeping conversations going between all these groups will help us use AI in ways that benefit everyone.

FAQs

What are the three ethical concerns about AI?

The three ethical concerns about AI are unfairness, privacy, and responsibility. AI can accidentally treat some people unfairly, personal information might be misused, and it’s often unclear who’s responsible when AI makes mistakes or causes problems.

What are the three main ideas behind AI ethics?

AI ethics is based on three main ideas: being open, being fair, and taking responsibility. Being open means everyone should understand how AI works. Being fair means AI should treat everyone equally. Taking responsibility means someone must answer for what AI does. These three ideas help ensure AI is used in the right way.

What is the AI code of ethics?

The AI code of ethics is a set of rules that guide how we create and use AI. These rules say AI should be fair, clear about how it works, and protective of people’s privacy, and that someone must take responsibility for what it does. This code helps organizations use AI in ways that build trust and benefit everyone.