AI legal issues are creating significant challenges because generative AI is growing faster than current legal frameworks can adapt. The result is a lack of clarity on critical matters such as intellectual property, data privacy, liability, and, most importantly, bias. Bias in AI systems can lead to discriminatory outcomes, which makes it the biggest legal issue in the AI landscape.

These unresolved legal issues expose businesses to intellectual property infringement, data breaches, biased decision-making, and ambiguous liability when AI-related incidents occur. This uncertainty can lead to costly legal battles and stifle innovation, leaving businesses and consumers vulnerable and hesitant to adopt AI technologies.

In this article, you’ll learn about the key AI legal issues, explore real-world examples, and discover practical solutions and strategies to navigate these challenges effectively.

What are AI legal issues?

AI legal issues are the legal risks that arise when an organization uses artificial intelligence in ways that break laws or obligations and expose it to litigation.

Examples of AI legal issues include data leaks, misrepresented information, and the use of AI tools without permission or for unintended purposes.

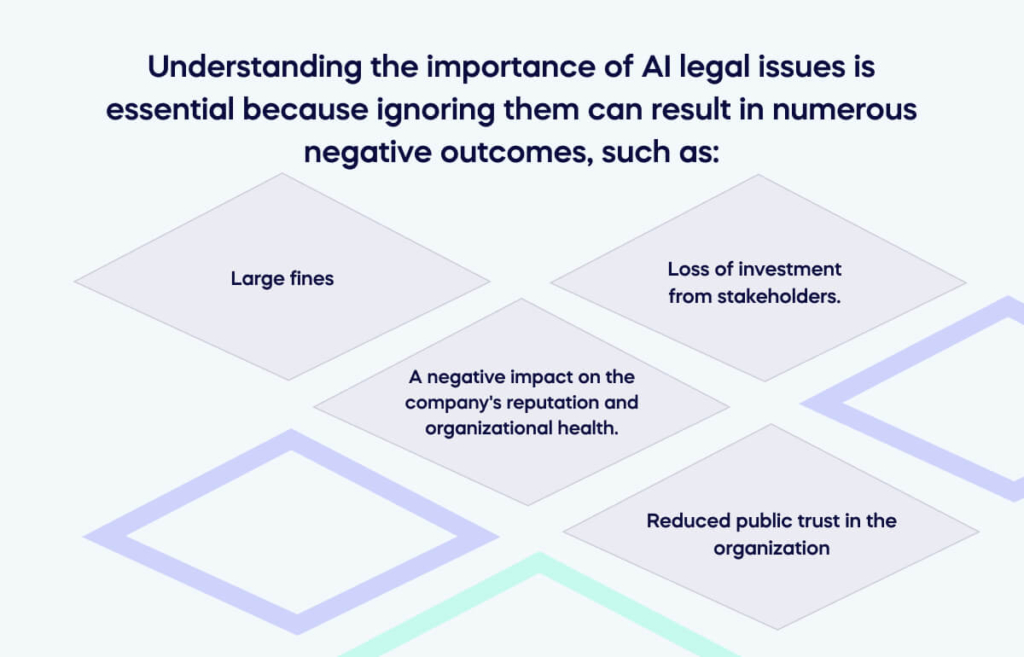

Understanding AI legal issues matters because ignoring them can lead to serious consequences, such as:

- Large fines.

- A negative impact on the company’s reputation and organizational health.

- Loss of investment from stakeholders.

- Reduced public trust in the organization.

By taking AI legal issues seriously, organizations can avoid these negative consequences and preserve their reputation and resources.

The 7 most common AI legal issues and how to deal with them

Familiarize yourself with the seven most common AI legal issues and how to deal with them to avoid litigation while reaping the benefits of AI.

1. Data and security leakage

If you enter sensitive third-party or internal company information into ChatGPT, the provider may store it and, depending on your account's data settings, use it to train future models. Fragments of that information could then surface in responses to other users' queries.

This creates a risk of data leakage and may breach your organization's data retention policy.

If an organization has a relationship with the central government, this issue can even create national security risks.

How to deal with it

Keep confidential information out of ChatGPT. For example, if your team is helping a customer launch a product, don't enter its unreleased specifications or marketing strategy into your prompts.

Taking this precaution helps reduce the risk of data leakage and security breaches.
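For teams that route prompts through an internal tool before they reach ChatGPT, a simple pre-submission filter can catch some obvious slips. The Python sketch below is a minimal illustration only: the list of confidential terms (`CONFIDENTIAL_TERMS`) and the `redact_prompt` helper are hypothetical, and pattern matching like this misses anything not on the list, so it supplements the precaution above rather than replacing it.

```python
import re

# Hypothetical list of confidential terms; in practice this would come from
# your organization's own data-classification policy.
CONFIDENTIAL_TERMS = ("Project Falcon", "Q3 launch price")

# Rough pattern for email addresses (good enough for a sketch, not RFC-complete).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_prompt(text: str, terms: tuple[str, ...] = CONFIDENTIAL_TERMS) -> str:
    """Mask email addresses and listed confidential terms before text leaves the company."""
    redacted = EMAIL_PATTERN.sub("[EMAIL]", text)
    for term in terms:
        # Escape the term so characters like "." are matched literally,
        # and ignore case so "project falcon" is caught too.
        redacted = re.sub(re.escape(term), "[REDACTED]", redacted, flags=re.IGNORECASE)
    return redacted


print(redact_prompt("Email jane.doe@example.com about Project Falcon"))
# Email [EMAIL] about [REDACTED]
```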

2. Intellectual property complexities

Determining who owns code or text generated by ChatGPT can be complicated. Under OpenAI's terms of use, responsibility for the output lies with the user who provides the input.

However, complications can arise if the output contains legally protected material, for example because the inputs or training data were not handled in line with intellectual property and AI compliance practices.

Copyright issues can surface if generative AI produces written material derived from copyrighted works, which breaches AI compliance rules and increases legal risk.

Imagine a scenario where a user asks ChatGPT for marketing material, resulting in an output that includes copyrighted content without proper attribution or permission.

This scenario poses a potential risk of infringing upon the intellectual property rights of the original content creators, leading to legal consequences and potential damage to the company’s reputation.

How to deal with it

Establishing robust documentation for AI development processes and sources is crucial. Comprehensive tracking systems help you trace where content came from and whether it complies with intellectual property rules.

Collaborating with legal experts ensures alignment with existing intellectual property laws, reducing the risk of disputes.
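As one illustration of what a tracking system might record, the Python sketch below logs a provenance entry for each piece of AI-assisted content. The `ProvenanceRecord` fields and the `make_record` helper are hypothetical choices for this example, not a standard format.

```python
import hashlib
from dataclasses import dataclass
from datetime import date


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of the text as a hex string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical log entry describing where one piece of AI-assisted content came from."""
    tool: str            # which AI tool produced the draft, e.g. "ChatGPT"
    prompt_sha256: str   # a hash, so the log itself never stores confidential prompt text
    output_sha256: str   # lets you later match published content to this entry
    reviewer: str        # person who checked the output for copyrighted material
    reviewed_on: date


def make_record(tool: str, prompt: str, output: str,
                reviewer: str, reviewed_on: date) -> ProvenanceRecord:
    """Build a record from the raw prompt and output, keeping only their hashes."""
    return ProvenanceRecord(tool, sha256_hex(prompt), sha256_hex(output),
                            reviewer, reviewed_on)


record = make_record("ChatGPT", "Write a product tagline", "Fresh ideas, daily.",
                     "A. Reviewer", date(2024, 1, 15))
print(record.tool, record.reviewer, record.reviewed_on)
```

Storing hashes instead of raw text keeps the log from becoming a second copy of confidential prompts. The trade-off is that you must keep the original content elsewhere if you ever need to show exactly what was generated.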

3. Open-source license compliance

Imagine that generative AI draws on open-source libraries and your team integrates the resulting code into a product.

This can breach open-source software (OSS) licenses such as the GPL, causing legal issues for the organization.

For instance, if a company uses ChatGPT to create code for a software product, and the source of the GPT training data is uncertain, there’s a risk of violating terms in open-source licenses linked to that code.

Such a violation could lead to legal complications, including allegations of license infringement and possible legal action from the code's copyright holders in the open-source community.

How to deal with it

To address open-source license compliance, organizations should carefully review and document the sources of AI training data and of any AI-generated code they ship.

Implementing effective tracking mechanisms, ensuring proper attribution, and obtaining legal guidance facilitate adherence to open-source licenses and mitigate potential compliance issues.
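One tracking mechanism is an automated check of your dependency inventory. The Python sketch below assumes you already have a mapping from each package to its SPDX license identifier (for example, exported from a software bill of materials). The package names are hypothetical, and the prefix list covers only GPL-family licenses, so treat it as a trigger for legal review rather than a complete compliance check. It also won't catch code that an AI tool copied directly into your own source files.

```python
# Illustrative, not exhaustive: SPDX license-ID prefixes for the GPL family.
COPYLEFT_PREFIXES = ("GPL-", "LGPL-", "AGPL-")


def flag_copyleft(dependencies: dict[str, str]) -> list[str]:
    """Return, sorted, the names of dependencies whose SPDX license ID is in the GPL family."""
    return sorted(
        name
        for name, license_id in dependencies.items()
        # str.startswith accepts a tuple and matches if any prefix matches.
        if license_id.startswith(COPYLEFT_PREFIXES)
    )


# Hypothetical inventory: package name -> SPDX license identifier.
inventory = {
    "left-pad-clone": "MIT",
    "fast-parser": "GPL-3.0-only",
    "http-helpers": "Apache-2.0",
    "report-engine": "AGPL-3.0-or-later",
}
print(flag_copyleft(inventory))  # ['fast-parser', 'report-engine']
```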

4. Confidentiality and liability concerns

Disclosing confidential customer or partner information can breach contracts and legal obligations.

If the security of the organization's ChatGPT use is compromised, any confidential content shared through it is exposed, putting the organization's reputation at stake and creating legal liability.

Another risk involves staff using ChatGPT without proper training or IT approval and engaging in shadow IT or shadow AI practices. This makes monitoring and regulating the AI tool’s usage challenging.

Imagine a healthcare organization using ChatGPT for patient inquiries.

Sharing confidential patient details, such as medical records, with ChatGPT may violate legal obligations and infringe on patient privacy rights under laws like HIPAA in the United States.

How to deal with it

Addressing AI confidentiality and liability concerns requires robust security measures, including encryption and access controls.

Establishing clear policies on data handling and user training reduces risks.

Legal consultation ensures compliance, minimizing potential liabilities and safeguarding confidential information.

5. Unclear international privacy and compliance law

Malicious actors can exploit generative AI to create malware, generate content for phishing attacks and scams, and carry out cyberattacks using data from the dark web. Because these activities often cross borders, and privacy and cybersecurity laws differ from country to country, it can be unclear which rules apply.

For instance, ChatGPT could be paired with bots to produce deceptive fake news articles and misleading content that fool readers.

How to deal with it

Navigating unclear international privacy and compliance law requires constant monitoring of evolving regulations.

Collaborate with legal experts to craft adaptable policies that align with international privacy standards.

6. Tort liability (bias)

Using AI exposes associations to potential tort liability.

If the AI produces inaccurate, negligent, or biased outcomes causing harm, the association may be liable for damages.

Hence, associations must ensure AI reliability and accuracy, vetting the work product for precision, veracity, completeness, and efficacy.

How to deal with it

Addressing AI tort liability involves establishing transparent guidelines for AI development, user education, and regular risk assessments.

Legal counsel ensures compliance with existing laws, minimizing potential liability risks.
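A regular risk assessment for bias can include a simple statistical screen. One widely cited heuristic in US employment contexts is the "four-fifths rule" from the EEOC's Uniform Guidelines on Employee Selection Procedures: if a group's selection rate is less than 80% of the highest group's rate, that may indicate adverse impact. The Python sketch below applies it to hypothetical outcomes from an AI screening tool; the group labels, numbers, and function names are assumptions, and a flag is a prompt for human and legal review, not a legal finding of bias.

```python
def adverse_impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's selection rate.

    outcomes maps a group label to (number selected, number considered).
    """
    rates = {}
    for group, (selected, considered) in outcomes.items():
        if considered <= 0:
            raise ValueError(f"group {group!r} has no candidates")
        rates[group] = selected / considered
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any positive outcomes; ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def groups_below_four_fifths(outcomes: dict[str, tuple[int, int]],
                             threshold: float = 0.8) -> list[str]:
    """Return, sorted, the groups whose ratio falls below the threshold."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical results from an AI resume-ranking tool: (selected, considered).
results = {"group_a": (50, 100), "group_b": (30, 100)}
print(groups_below_four_fifths(results))  # ['group_b']
```

Here group_b's selection rate (30%) is 60% of group_a's (50%), which falls below the 80% threshold. Small sample sizes can make this ratio unstable, which is one more reason to pair it with expert review.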

7. Insurance

Associations must secure suitable insurance to address liability claims in these areas. Traditional nonprofit directors and officers (D&O) liability and commercial general liability policies may be inadequate.

Exploring errors and omissions (E&O) liability and media liability insurance is crucial to filling coverage gaps.

How to deal with it

To resolve AI insurance issues, organizations should conduct thorough risk assessments, document AI systems’ development, and seek specialized insurance coverage.

Seek legal consultation to ensure your coverage stays comprehensive as the technology evolves.

Which laws address AI legal issues?

Laws like the European Union's General Data Protection Regulation (GDPR) guide AI use and protect personal data, helping organizations avoid AI legal issues.

GDPR requires careful treatment of personal data, ensuring it’s secure, confidential, and used correctly.

Organizations must take steps to safeguard data from unauthorized access, breaches, and cyber threats. Following GDPR and similar laws reduces privacy concerns and other AI legal issues.

Some legislation, such as the California Consumer Privacy Act (CCPA), serves as a model for other states on protecting consumer data. However, it doesn't address how to use AI, and it applies only to for-profit businesses that operate in California and meet certain thresholds.

The US is working on AI regulation and privacy laws. Until it has its own rules for generative AI and other AI tools, companies can follow GDPR for guidance on avoiding legal liability.

Protect yourself from litigation by avoiding AI legal issues

The surest way to avoid AI-related legal challenges is to prevent the issues that cause them.

Associations using AI should be careful to prevent possible legal problems caused by inaccurate or biased results.

It’s crucial to make sure AI systems are reliable and accurate. Also, get comprehensive insurance, as regular policies might not be enough.

Be cautious, protect your association, and navigate the AI world wisely to minimize risks.