As artificial intelligence becomes more popular for business, it becomes more important to use it correctly. AI has enhanced financial outcomes, employee experience, and the quality of services. But it also brings significant risks. These include data privacy concerns, security vulnerabilities, and potential misuse.

In response, companies can develop AI systems that promote both societal good and business innovation. This approach, known as responsible AI, goes beyond addressing bias to consider broader impacts on safety and privacy.

Yet the greater challenge lies in implementing it effectively. This article explains what responsible AI is, how it works, and why it is important. It also covers how you can implement it within your organization, its benefits and challenges, and how it differs from ethical AI.

What is responsible AI?

Responsible AI is about designing, developing, and applying AI technologies with positive goals. These goals focus on enhancing the capabilities of employees and businesses while also benefiting customers and society. By pursuing them, companies can foster trust and expand their AI initiatives.

As organizations expand their use of AI to gain business benefits, they must remain vigilant about regulation. They must take the necessary actions to stay compliant and uphold responsible AI practices.

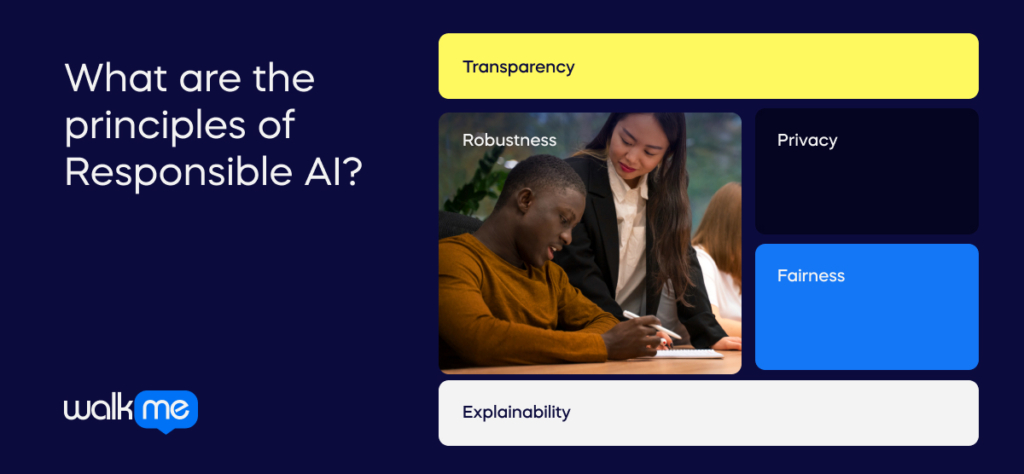

How does responsible AI work? What are the principles of responsible AI?

To understand how responsible AI works, you need to be aware of these guiding principles:

Transparency

Transparent AI systems ensure you understand how decisions are made and outputs are generated. This includes clarity on data collection, storage, and usage. It also includes user-friendly interfaces and visualizations like saliency maps. Documentation, disclosures, and dashboards further enhance transparency. They do this by detailing the steps taken in AI development and tracking any associated risks.

Robustness

Robust AI can handle exceptional conditions and malicious attacks without causing unintended harm. It guards against vulnerabilities and keeps confidential information secure. Establishing security measures and mitigating risks are essential to protect AI models.

Privacy

Respecting privacy regulations and safeguarding personal data is crucial for responsible AI practices. Companies must put in place data governance frameworks to ensure data accuracy, security, and accessibility. Anonymization and aggregation techniques also help protect sensitive data. This preserves privacy while enabling model training.
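As a minimal sketch of the anonymization and aggregation ideas above, the Python snippet below replaces a direct identifier with a keyed hash and reports only group-level counts, dropping groups that are too small to report safely. The field names, the secret key, and the minimum group size of 3 are illustrative assumptions. Keyed hashing is, strictly speaking, pseudonymization rather than full anonymization, so treat it as one layer of protection rather than a complete solution.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str) -> str:
    """Replace a direct identifier with a keyed SHA-256 digest."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def aggregate_counts(records, field, min_group_size=3):
    """Count records per value of `field`, dropping groups below the minimum size."""
    counts = Counter(record[field] for record in records)
    return {value: n for value, n in counts.items() if n >= min_group_size}


# Illustrative records, not real data.
records = [
    {"email": "a@example.com", "region": "north"},
    {"email": "b@example.com", "region": "north"},
    {"email": "c@example.com", "region": "north"},
    {"email": "d@example.com", "region": "south"},
]

# Rows intended for model training carry a pseudonym instead of the email.
training_rows = [
    {"user_id": pseudonymize(r["email"]), "region": r["region"]} for r in records
]

# The "south" group has a single member, so it is left out of the report.
region_counts = aggregate_counts(records, "region")
```

The same pseudonym is produced for the same email each time, so records can still be linked for training without exposing the original identifier.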

Fairness

Addressing biases in AI models is essential to ensure fair outcomes, especially in decision-making. Diverse and representative training data help mitigate biases. Techniques like re-sampling and interdisciplinary team collaboration aid in identifying and rectifying biases.
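To make re-sampling concrete, here is a small Python sketch of one common variant, random oversampling, which duplicates rows from under-represented groups until every group is the same size as the largest one. The function name, the `group` field, and the sample rows are hypothetical. Oversampling only balances group sizes; it does not correct biased labels or missing features, so it is a starting point rather than a full remedy.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(rows, group_key, seed=0):
    """Duplicate rows from smaller groups until each group matches the largest."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)

    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Illustrative, imbalanced training rows: six from group "a", two from group "b".
rows = [{"group": "a", "id": i} for i in range(6)]
rows += [{"group": "b", "id": i} for i in range(6, 8)]

balanced_rows = oversample_to_balance(rows, "group")
group_sizes = Counter(row["group"] for row in balanced_rows)
```

After balancing, both groups contribute six rows each, so the model sees them equally often during training.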

Explainability

Explainability allows users to comprehend and trust AI results. You can achieve this through traceability and accuracy. Traceability documents data processing and model decisions, while accuracy ensures reliable predictions. Continuous learning enables practitioners to understand AI processes and conclusions. This builds trust and accountability.
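One way to picture traceability is a decision record that is written every time a model produces an output. The Python sketch below is an assumption about what such a record might contain; the field names, the model version string, and the example loan decision are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One traceable model decision: what went in, what came out, and why."""
    model_version: str
    input_features: dict
    prediction: str
    top_factors: list
    timestamp: str


def record_decision(model_version, input_features, prediction, top_factors):
    """Build a decision record and serialize it as a JSON line for an audit log."""
    record = DecisionRecord(
        model_version=model_version,
        input_features=input_features,
        prediction=prediction,
        top_factors=top_factors,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example values.
log_line = record_decision(
    model_version="credit-model-1.2",
    input_features={"income_band": "medium", "months_employed": 18},
    prediction="refer_to_underwriter",
    top_factors=["months_employed"],
)
```

Storing the model version and the main contributing factors alongside each prediction makes it possible to reconstruct later why a given outcome was produced.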

Why is responsible AI important?

Responsible AI is important to ensure AI decisions are fair, ethical, and in line with current laws and regulations.

AI models often depend on data gathered from various sources. Some of this data may be collected without the necessary permissions, or it may include proprietary information. AI systems must manage this data in compliance with data privacy laws. They must also safeguard it against cybersecurity threats.

The issue of bias in AI is particularly pressing. AI models are built on data, so any inherent biases or inaccuracies in that data can lead to skewed outputs. The complexity of AI algorithms makes this problem worse.

These algorithms are based on intricate mathematical patterns that can be too complex even for experts to understand. This complexity often makes it difficult to explain why an AI model arrived at a specific outcome.

For instance, if an AI recruiting tool shows bias against certain groups, it could impact the livelihoods of many individuals. Moreover, any violation of data privacy laws can put people’s personal information at risk and expose the company to financial penalties.

Responsible AI provides a way forward. It offers a framework for companies to create and use AI technologies that are secure, trustworthy, and fair. This approach helps mitigate risks and ensures that AI benefits all.

By embracing a responsible approach, companies can achieve several important objectives. They can create AI systems that are both efficient and compliant with regulations. This approach also ensures that AI development accounts for ethical, legal, and societal considerations.

Additionally, it facilitates the tracking and reduction of biases in AI models. This contributes to building trust in AI technologies. Moreover, it focuses on preventing or minimizing the negative impacts associated with AI. Finally, it helps clarify accountability in situations where AI malfunctions or errors occur.

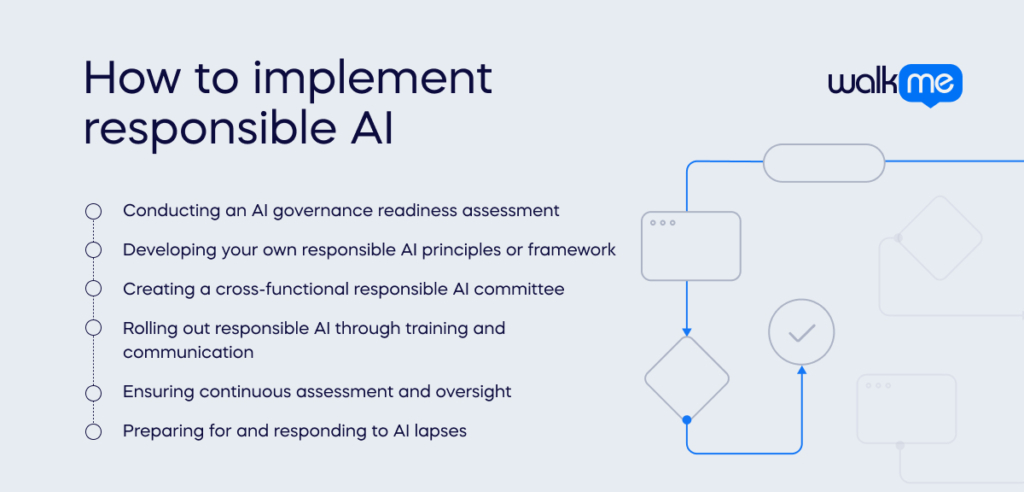

How to implement responsible AI

Implementing responsible AI practices within your organization involves:

Conducting an AI governance readiness assessment

Effective AI governance starts with an evaluation of an organization’s AI preparedness. This involves looking at how AI is currently utilized and planned for future use.

This step is crucial for ensuring a company’s vision of responsible AI matches up with its existing values. It also looks at the current business model and the potential risks associated with AI deployment. This process helps assess whether the current governance frameworks are robust enough to navigate the complexities of AI technologies.

Many organizations are now adopting the ‘three lines of defence’ model to manage the risks associated with AI. This strategic approach divides responsibilities across three distinct layers within the company. The first line consists of operational managers and employees who manage day-to-day risks. The second line consists of specialized risk management and compliance teams, which offer oversight and support. The third line consists of internal audit functions, which offer independent assurance that risk management and governance processes are working.

Developing your own responsible AI principles or framework

Create a set of responsible AI principles that match the company’s overarching values and goals. These principles are the foundation for the organization’s responsible AI initiative. They guide decision-making and development processes.

A dedicated cross-functional AI ethics team is essential to bringing these principles to life. This team should include AI specialists, ethicists, legal experts, and business leaders. This ensures a holistic approach to ethical AI development.

Creating a cross-functional responsible AI committee

You need a diverse responsible AI committee to oversee the ethical implementation of AI technologies. This committee should tackle a range of complex ethical issues, from bias to unintended consequences of AI deployment. It should include members from different business units, regions, and backgrounds.

In this way, this group can reflect a broad spectrum of perspectives and experiences. This diversity is critical for navigating the ethical landscape of AI effectively.

Rolling out responsible AI through training and communication

Educating the wider organization about responsible AI is fundamental to its successful implementation. Employee training and communication efforts must go beyond the technical teams. They need to include executive leadership and end-users of AI systems.

These programs should cover the importance of retraining AI models for accuracy and of adopting effective data management practices. They should also focus on understanding the ethical implications of AI work. Embedding responsible AI practices across the AI development pipeline keeps all team members aligned with the company’s ethical standards.

Ensuring continuous assessment and oversight

Maintaining the ethical integrity of AI systems requires continuous assessment for biases, emerging ethical concerns, and adherence to ethical guidelines.

Implementing mechanisms for human oversight and defining clear accountability lines are essential to this process. Continuous evaluation enables organizations to identify and address any ethical issues. This ensures AI systems remain aligned with the company’s ethical commitments.
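A simple way to picture human oversight is a rule that sends low-confidence predictions to a person instead of acting on them automatically. The Python sketch below assumes the model reports a confidence score between 0 and 1; the function name, the 0.8 threshold, and the labels are hypothetical choices for illustration.

```python
def route_prediction(label, confidence, threshold=0.8):
    """Decide whether the model's output is used directly or sent to a reviewer."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return {"label": label, "decided_by": "model"}
    # Below the threshold, a named human reviewer is accountable for the outcome.
    return {"label": label, "decided_by": "human_review"}


# Hypothetical predictions from a screening model.
confident = route_prediction("approve", 0.93)
uncertain = route_prediction("reject", 0.55)
```

Recording who made each decision, the model or a reviewer, also supports the clear accountability lines described above.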

Preparing for and responding to AI lapses

Acknowledging the potential for errors and preparing for AI lapses are critical components of responsible AI governance. Organizations must have a response plan in place to address any adverse impacts.

This plan should detail the actions needed to mitigate harm and correct technical issues. It should also set out how to communicate transparently with customers and employees about the incident.

Ensure that roles and responsibilities are clearly detailed. Develop, test, and refine these response procedures to reduce the negative consequences of any AI system failures. This can help safeguard both customers and the company.

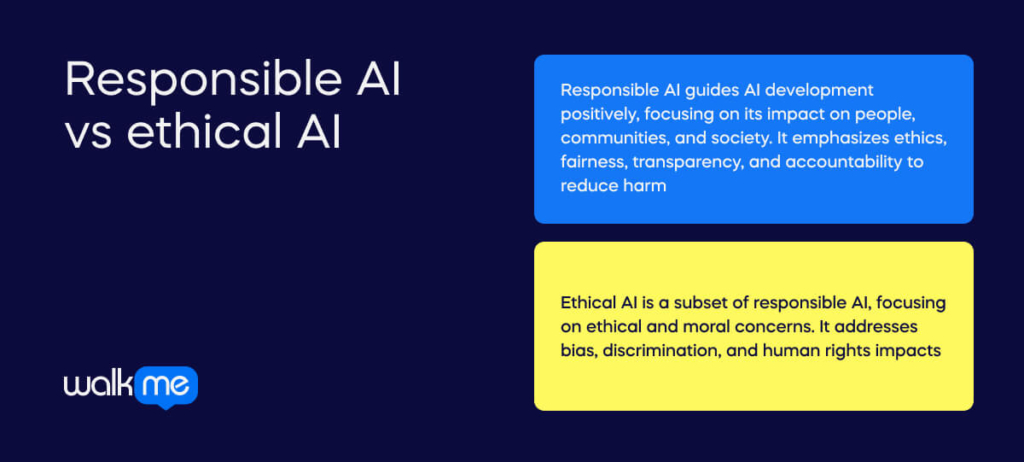

Responsible AI vs ethical AI

Responsible AI represents a broad approach that aims to steer the development of AI in a positive direction. It emphasizes evaluating and mitigating the impact of AI on people, communities, and society at large. This approach incorporates a variety of considerations. These include ethics, fairness, transparency, and accountability, all aimed at reducing harm.

Ethical AI, by contrast, is a subset of responsible AI. It concentrates specifically on the ethical and moral concerns related to AI, such as bias, discrimination, and effects on human rights.

Benefits of responsible AI

Responsible AI has several benefits, which include:

Competitive advantage

In today’s evolving business landscape, responsible AI practices offer more than just a means to stay relevant. They present significant opportunities for building competitive advantages and expanding revenue streams.

Organizations can enhance their productivity by incorporating responsible AI principles into their operations. They can also strengthen their reputation and gain a competitive edge in the market.

Enhanced productivity and reputation

Implementing responsible AI practices yields tangible benefits. These include improved products, enhanced talent retention, and better regulatory compliance readiness. Companies that embrace responsible AI early on can differentiate themselves and their offerings.

They can also establish themselves as leaders in ethical and sustainable business practices. This fosters trust among stakeholders and bolsters their reputation. This can be an important driver of customer delight and brand preference.

Expanded customer base and engagement

Responsible AI initiatives can broaden an organization’s customer base and deepen engagement with existing clients. You can do this by utilizing inclusive data sets and transparent processes.

This allows businesses to cater to a wider variety of customers, and to attract and retain them. Furthermore, responsible AI practices enable companies to align their products and services more closely with user needs. This builds stronger and more enduring relationships.

Business procurement advantages

The emphasis on responsible AI is not limited to customer-facing activities. It also extends to business procurement processes. Corporate customers focus on ethical considerations when selecting technology vendors or partners.

As a result, companies that show a commitment to responsible AI stand to gain a competitive advantage. These organizations can enhance their credibility and strengthen their position in the market.

Market segmentation and differentiation

Responsible AI practices can also be a powerful market segmentation and differentiation tool. They allow companies to carve out specialized niches and target specific sectors or customer segments.

By leveraging their expertise in responsible AI, organizations can position themselves as trusted partners for clients with stringent compliance needs. This helps them beat less ethically focused competitors.

Long-term success through consumer demand

The success of responsible AI initiatives hinges on consumer demand. It also depends on consumers’ willingness to pay for ethically designed products and services. By capitalizing on this trend and delivering value through ethical practices, organizations can secure their long-term success and solidify their position as leaders in the market.

Risks of responsible AI

Organizations expose themselves to risk when they fall short in any of the following areas:

Understanding the evolving landscape of responsible AI

Responsible AI requires that AI systems are fit for their intended uses. They should also remain relevant and acceptable within shifting societal, economic, and cultural contexts.

This adaptability requires organizations to track societal and cultural expectations. However, not every organization has the capability to stay abreast of these changes.

Navigating new technological risks

Addressing the challenges introduced by new technologies goes beyond tackling technological questions. It includes dealing with issues of equity and societal impact. Leaders must recognize these enhanced challenges. To do this, they should focus on proactive attention, open disclosure, and effective communication.

Planning for the complexity of integrating third-party AI

Creating a responsible AI framework that manages the risks associated with third-party AI tool integration presents significant challenges. Assessing third-party AI tools demands a different approach than evaluating in-house AI systems.

This distinction requires more training and resources. Many organizations struggle to conduct detailed assessments of third-party AI solutions. This is because of resource constraints and the lack of responsible AI policies.

Enhancing third-party risk management

With the rapid advancement of AI technologies, establishing thorough third-party risk management policies is crucial. However, there is no standardized approach for evaluating and integrating third-party tools. This highlights the need for mechanisms that facilitate ongoing evaluation of these tools.

Addressing organizational and cultural barriers

The preference for short-term achievements often leads to inadequate risk mitigation efforts. It also overlooks potential reputational harm. Organizations can also struggle to create environments that encourage individuals to voice concerns about AI systems. Challenges such as stakeholder misalignment, bureaucratic processes, and unclear ownership further impede proactive responsible AI practices.

Tackling technical and measurement challenges

Traditional performance indicators like revenue and click-through rates fall short of capturing responsible AI success. Instead, a deeper dive into understanding biases and developing tailored mitigation strategies is essential. Using tools to explore bias sources and check fairness is critical for informed decision-making.
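As an illustration of what a basic fairness check can look like, the Python sketch below compares selection rates across groups, a measure often described as demographic parity. The group names and decisions are made-up examples, and this is only one of several fairness measures; which one fits depends on the use case.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to the share of its members who received a positive outcome."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest selection rate across groups."""
    return max(rates.values()) - min(rates.values())


# Illustrative decisions as (group, was_selected) pairs, not real data.
decisions = (
    [("group_a", True)] * 4 + [("group_a", False)] * 4
    + [("group_b", True)] * 2 + [("group_b", False)] * 6
)

rates = selection_rates(decisions)  # group_a: 0.5, group_b: 0.25
gap = parity_gap(rates)             # 0.25
```

A gap close to zero suggests groups are selected at similar rates, while a large gap is a signal to investigate the data and the model further. It is not proof of unfairness on its own.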

Proactively creating responsible AI initiatives

Moving to a proactive stance on responsible AI requires organizations to articulate their responsible business missions clearly. Regular monitoring and transparent communication are vital for effective risk management.

What’s next for responsible AI

As we delve deeper into AI’s capabilities and its potential to reshape our world, the imperative for responsible oversight of AI technologies grows clearer. Responsible AI primarily aims to harness AI’s capabilities to enrich our lives and benefit society as a whole.

First, you need to adhere to established principles and regulations. Next, it is important to leverage diverse and high-quality data when developing responsible AI. Ensuring that the data used to train AI systems is unbiased and representative of all intended users can boost system effectiveness.

Organizations must focus on these values at every level to advocate for responsible AI and eradicate bias. This starts with leadership embedding ethical AI practices into the organization’s core. This ethos should permeate every aspect of the organization’s culture.

Encouraging cross-functional collaboration is key to identifying and addressing AI systems’ blind spots. These may not become clear until unexpected risks emerge. Adopting strategies that incorporate a variety of perspectives can help reduce these drawbacks.

Furthermore, you need to integrate ethical considerations throughout a product’s development cycle. This encompasses scrutinizing all data processing phases. These phases include collection, annotation, assessment of relevance, and validation, to prevent bias. A focus on the diversity, volume, and accurate representation of data is also essential. This is important for delivering responsible AI solutions that stand the test of time.